-

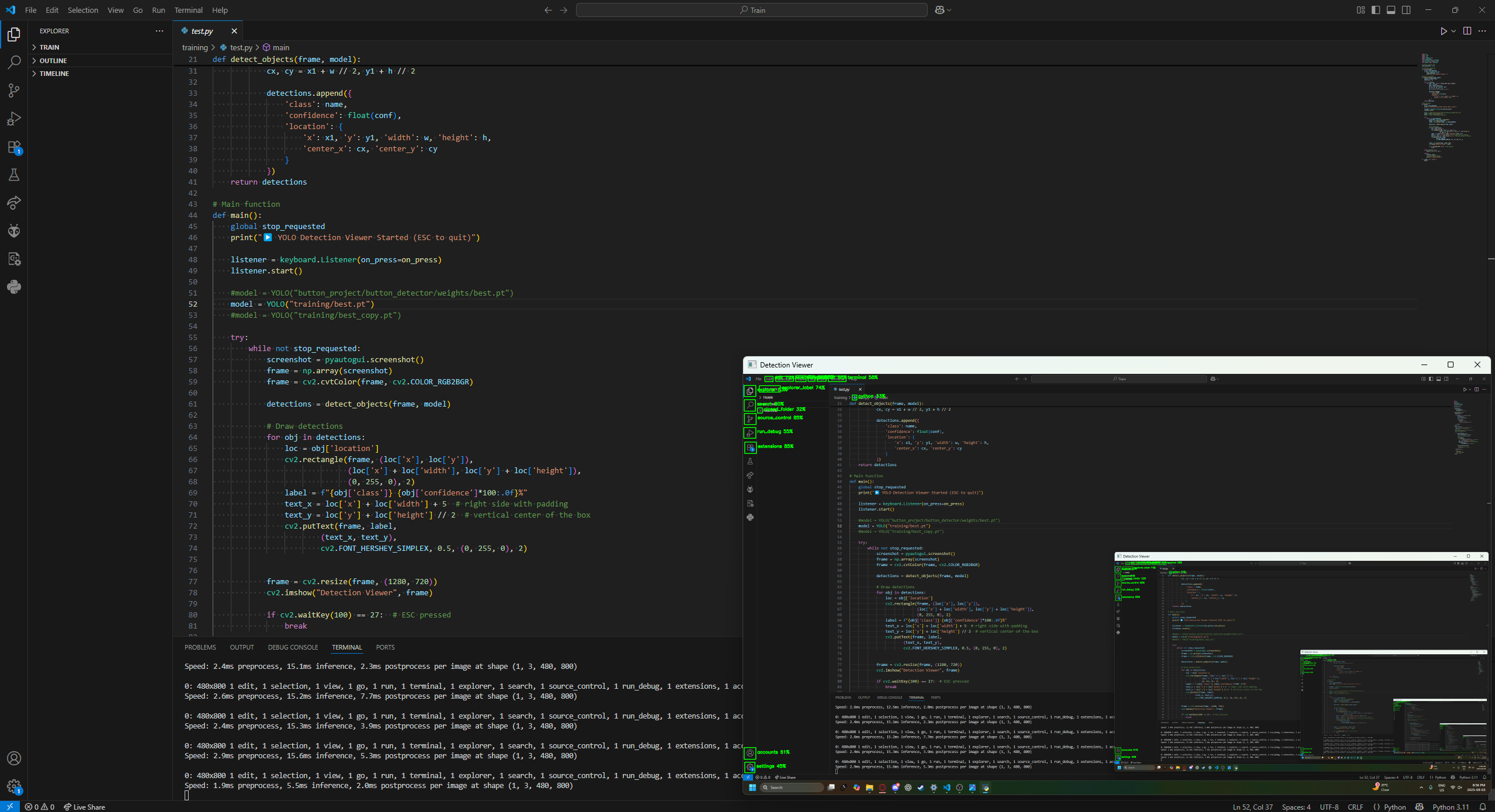

Collected screenshots and real-time GUI imagery to build a dataset of button targets, annotated and preprocessed for training.

- Trained a custom YOLO model on a personal NVIDIA GPU, which allowed local experimentation, faster iteration, and fine-tuning for button detection.

- Applied the YOLO model to real-time button recognition, logging, and automated mouse control, demonstrating practical integration of ML-based computer vision with system-level interaction.

- Designed with future extensions in mind: YOLO detection could work alongside OCR and voice commands to help visually impaired users navigate computer interfaces.
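
The recognition, logging, and mouse-control step described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the `Detection` class, the `click_point` and `click_best` helpers, the 0.5 confidence threshold, and the injected `click` callable are all hypothetical names and values chosen for the example. It assumes the detector returns pixel-space boxes for a captured frame whose size may differ from the screen size.

```python
import logging
from dataclasses import dataclass
from typing import Callable, Iterable, Optional, Tuple

logger = logging.getLogger("button_clicker")  # hypothetical logger name


@dataclass(frozen=True)
class Detection:
    """One detected button, in pixel coordinates of the captured frame (assumed layout)."""
    label: str
    confidence: float
    x1: float
    y1: float
    x2: float
    y2: float


def click_point(det: Detection,
                frame_size: Tuple[int, int],
                screen_size: Tuple[int, int]) -> Tuple[int, int]:
    """Map the centre of a detection box from frame pixels to screen pixels."""
    frame_w, frame_h = frame_size
    screen_w, screen_h = screen_size
    center_x = (det.x1 + det.x2) / 2
    center_y = (det.y1 + det.y2) / 2
    return round(center_x * screen_w / frame_w), round(center_y * screen_h / frame_h)


def click_best(detections: Iterable[Detection],
               frame_size: Tuple[int, int],
               screen_size: Tuple[int, int],
               click: Callable[[int, int], None],
               min_confidence: float = 0.5) -> Optional[Tuple[int, int]]:
    """Log and click the most confident detection; return None if none qualifies."""
    candidates = [d for d in detections if d.confidence >= min_confidence]
    if not candidates:
        logger.info("no detection at or above confidence %.2f", min_confidence)
        return None
    best = max(candidates, key=lambda d: d.confidence)
    x, y = click_point(best, frame_size, screen_size)
    logger.info("clicking %r (confidence %.2f) at (%d, %d)",
                best.label, best.confidence, x, y)
    click(x, y)  # the actual mouse action is injected by the caller
    return x, y
```

Passing the mouse action in as a callable keeps the sketch testable without moving a real cursor. In a real setup, `click` might be a function from a GUI automation library such as `pyautogui.click`, and the boxes would come from the trained model's output; both are assumptions, since the summary does not name the libraries used.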